import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import optimizers

from tensorflow.keras.layers import Dense, Flatten, Conv2D, MaxPooling2D, BatchNormalization, Input

import time

▶ Previous CNN code

* CNN review before applying it to CIFAR10

(raw_train_x, raw_train_y), (raw_test_x, raw_test_y) = tf.keras.datasets.mnist.load_data()

train_x = raw_train_x/255

test_x = raw_test_x/255

train_x = train_x.reshape((60000, 28, 28, 1))

test_x = test_x.reshape((10000, 28, 28, 1))

train_y = raw_train_y

test_y = raw_test_y

# Use only a subset of the data (to save time)

train_x = train_x[:10000] # ADDED

train_y = train_y[:10000] # ADDED

model = keras.Sequential()

model.add(Input((28,28,1)))

model.add(Conv2D(32, (3, 3)))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(64, (3, 3)))

model.add(MaxPooling2D((2, 2)))

model.add(Flatten())

model.add(Dense(10, activation='relu'))

model.add(Dense(10, activation='relu'))

model.add(Dense(10, activation='softmax'))

model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

model.summary()

model.fit(train_x, train_y, epochs=5, verbose=1, batch_size=128)

loss, acc = model.evaluate(test_x, test_y)

print("loss=",loss)

print("acc=",acc)

y_ = model.predict(test_x)

predicted = np.argmax(y_, axis=1)

print(predicted)
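The model is compiled with `sparse_categorical_crossentropy` because the labels are integer class indices (0 to 9), not one-hot vectors, and `np.argmax(y_, axis=1)` turns each row of softmax probabilities into a predicted class. A minimal NumPy sketch of both steps, using made-up probabilities; the function below is a hypothetical re-implementation for illustration, not the Keras source:

```python
import numpy as np

def sparse_categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean negative log-probability of the true class (illustrative re-implementation).

    y_true: integer class indices, shape (N,) or (N, 1) like the Keras datasets.
    y_pred: softmax probabilities, shape (N, num_classes).
    """
    idx = np.asarray(y_true).reshape(-1).astype(int)   # flatten (N, 1) -> (N,)
    p_true = y_pred[np.arange(len(idx)), idx]          # probability given to the true class
    return float(np.mean(-np.log(np.clip(p_true, eps, 1.0))))

# Toy softmax outputs for 3 samples and 4 classes (made-up numbers).
probs = np.array([[0.70, 0.10, 0.10, 0.10],
                  [0.20, 0.50, 0.20, 0.10],
                  [0.25, 0.25, 0.25, 0.25]])
true_idx = np.array([[0], [1], [3]])   # integer labels with shape (N, 1)

loss = sparse_categorical_crossentropy(true_idx, probs)
predicted = np.argmax(probs, axis=1)   # same step as np.argmax(y_, axis=1) in the post
accuracy = float(np.mean(predicted == true_idx.reshape(-1)))
print(round(loss, 4), predicted, accuracy)
```

Note that a tie (the third row) resolves to the first index, so that sample counts as wrong.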

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d (Conv2D) | (None, 26, 26, 32) | 320 |
| max_pooling2d (MaxPooling2D) | (None, 13, 13, 32) | 0 |
| conv2d_1 (Conv2D) | (None, 11, 11, 64) | 18496 |
| max_pooling2d_1 (MaxPooling2D) | (None, 5, 5, 64) | 0 |
| flatten (Flatten) | (None, 1600) | 0 |
| dense (Dense) | (None, 10) | 1010 |
| dense_1 (Dense) | (None, 10) | 110 |
| dense_2 (Dense) | (None, 10) | 110 |
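The spatial sizes in the summary follow from two rules: a 3×3 `Conv2D` with the default `'valid'` padding shrinks each side by 2, and a 2×2 `MaxPooling2D` (stride equal to the pool size by default) halves it, dropping an odd leftover row or column. A small sketch that traces the sizes; the helper names are hypothetical, and the same arithmetic on a 32×32 input gives the sizes of the CIFAR10 model:

```python
def conv_out(size, kernel, stride=1):
    """Spatial size after a 'valid' convolution (Keras Conv2D default padding)."""
    return (size - kernel) // stride + 1

def pool_out(size, pool):
    """Spatial size after 'valid' max pooling with stride equal to the pool size."""
    return (size - pool) // pool + 1   # equals floor(size / pool) when size >= pool

def trace(size):
    shapes = []
    size = conv_out(size, 3)   # Conv2D(32, (3, 3))
    shapes.append(size)
    size = pool_out(size, 2)   # MaxPooling2D((2, 2))
    shapes.append(size)
    size = conv_out(size, 3)   # Conv2D(64, (3, 3))
    shapes.append(size)
    size = pool_out(size, 2)   # MaxPooling2D((2, 2))
    shapes.append(size)
    return shapes

mnist = trace(28)
cifar = trace(32)
# Flatten length = height * width * 64 channels from the last Conv2D
print(mnist, mnist[-1] ** 2 * 64)
print(cifar, cifar[-1] ** 2 * 64)
```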

▶ Applying the model to CIFAR10

# (raw_train_x, raw_train_y), (raw_test_x, raw_test_y) = tf.keras.datasets.mnist.load_data()

(raw_train_x, raw_train_y), (raw_test_x, raw_test_y) = tf.keras.datasets.cifar10.load_data()

print(raw_train_x.shape)

print(raw_train_y.shape)

print(raw_test_x.shape)

print(raw_test_y.shape)

train_x = raw_train_x/255

test_x = raw_test_x/255

# train_x = train_x.reshape((60000, 28, 28, 1)) # COMMENT OUT

# test_x = test_x.reshape((10000, 28, 28, 1)) # COMMENT OUT

train_y = raw_train_y

test_y = raw_test_y

→ (50000, 32, 32, 3) (50000, 1) (10000, 32, 32, 3) (10000, 1)
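The two `reshape` lines are commented out because CIFAR10 images already arrive as `(N, 32, 32, 3)` with a colour-channel axis, whereas MNIST images are `(N, 28, 28)` and need an explicit channel axis before `Conv2D`. A short sketch with random stand-in arrays (same shapes and `uint8` dtype as the datasets, not real images) showing the scaling and the channel axis:

```python
import numpy as np

# Random stand-ins shaped like the Keras datasets (not real images).
rng = np.random.default_rng(0)
mnist_like = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)     # grayscale: no channel axis
cifar_like = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)  # RGB: channel axis present

# Scale pixel values from [0, 255] to [0, 1], as in train_x = raw_train_x / 255
mnist_x = mnist_like / 255
cifar_x = cifar_like / 255

# MNIST needs a channel axis for Conv2D; this is what reshape((N, 28, 28, 1)) adds.
mnist_x = mnist_x.reshape((-1, 28, 28, 1))   # np.expand_dims(mnist_x, -1) is equivalent

print(mnist_x.shape, cifar_x.shape)
print(mnist_x.min(), mnist_x.max(), cifar_x.min(), cifar_x.max())
```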

labels = ["airplane", "automobile", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"]

def show_sample(i):
    # raw_train_y has shape (N, 1), so [i][0] extracts the integer class index
    print(raw_train_y[i][0], labels[raw_train_y[i][0]])
    plt.imshow(raw_train_x[i])
    plt.show()

for i in [2, 10, 12, 14]:
    show_sample(i)

→ 9 truck

4 deer

# Use only part of the data, because loading and training on all of it takes time.

train_x = train_x[:10000] # ADDED

train_y = train_y[:10000] # ADDED

model = keras.Sequential()

# model.add(Input((28,28,1)))

model.add(Input((32,32,3)))

model.add(Conv2D(32, (3, 3)))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(64, (3, 3)))

model.add(MaxPooling2D((2, 2)))

model.add(Flatten())

model.add(Dense(10, activation='relu'))

model.add(Dense(10, activation='relu'))

model.add(Dense(10, activation='softmax'))

model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

model.summary()

model.fit(train_x, train_y, epochs=5, verbose=1, batch_size=128)

loss, acc = model.evaluate(test_x, test_y)

print("loss=",loss)

print("acc=",acc)

y_ = model.predict(test_x)

predicted = np.argmax(y_, axis=1)

print(predicted)

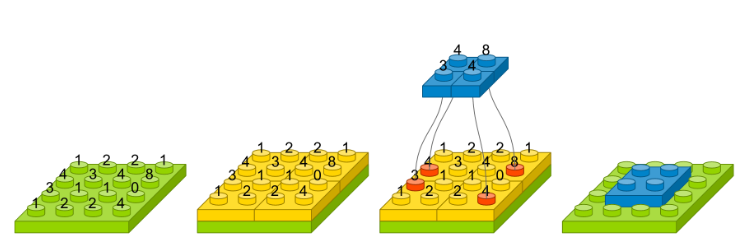

→ model.add(Conv2D(32, (3, 3)))

1. First argument: the number of convolution filters.

2. Second argument: the (rows, columns) size of the convolution kernel.
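What one of those 32 filters computes can be sketched in NumPy: a 3×3 kernel slides over the image without padding and produces a weighted sum at each position, so a 32×32 input yields a 30×30 feature map. This is a simplified sketch (single input channel, single filter, no bias, no activation); a real `Conv2D` layer sums over all input channels, adds a bias, and learns its kernel values. Like CNN layers, it slides the kernel without flipping it (cross-correlation):

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Single-channel, single-filter 'valid' convolution (cross-correlation, as in CNN layers)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # weighted sum of the kh x kw window whose top-left corner is (r, c)
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

image = np.arange(32 * 32, dtype=float).reshape(32, 32)   # toy 32x32 single-channel input
kernel = np.array([[0, 0, 0],
                   [0, 1, 0],
                   [0, 0, 0]], dtype=float)                 # picks the centre pixel of each window

feature_map = conv2d_valid(image, kernel)
print(feature_map.shape)   # without padding, a 3x3 kernel trims one pixel from each border
```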

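→ model.add(MaxPooling2D((2, 2))): keeps only the key (maximum) value in each window, producing a smaller output image and making the model less sensitive to small variations.

1. First value of the tuple: the vertical pooling size.

2. Second value of the tuple: the horizontal pooling size.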
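A minimal NumPy sketch of 2×2 max pooling, assuming the Keras defaults (stride equal to the pool size, `'valid'` padding): the feature map is cut into non-overlapping 2×2 blocks and only the largest value of each block is kept. The helper name is hypothetical:

```python
import numpy as np

def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 and 'valid' padding on a 2D array."""
    h, w = feature_map.shape
    h2, w2 = h // 2, w // 2                   # an odd trailing row/column is dropped
    trimmed = feature_map[:h2 * 2, :w2 * 2]
    # group pixels into 2x2 blocks, then take the max of each block
    return trimmed.reshape(h2, 2, w2, 2).max(axis=(1, 3))

x = np.array([[1, 3, 2, 0],
              [4, 2, 1, 1],
              [0, 0, 5, 6],
              [1, 2, 7, 8]])
pooled = max_pool_2x2(x)
print(pooled)
print(max_pool_2x2(np.zeros((30, 30))).shape, max_pool_2x2(np.zeros((13, 13))).shape)
```

Moving the 4 in the top-left block to a different cell of that block would leave the output unchanged, which is the "ignores minor variations" effect described above.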

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_2 (Conv2D) | (None, 30, 30, 32) | 896 |
| max_pooling2d_2 (MaxPooling2D) | (None, 15, 15, 32) | 0 |
| conv2d_3 (Conv2D) | (None, 13, 13, 64) | 18496 |
| max_pooling2d_3 (MaxPooling2D) | (None, 6, 6, 64) | 0 |
| flatten_1 (Flatten) | (None, 2304) | 0 |
| dense_3 (Dense) | (None, 10) | 23050 |
| dense_4 (Dense) | (None, 10) | 110 |
| dense_5 (Dense) | (None, 10) | 110 |

Total params: 42,662
Trainable params: 42,662
Non-trainable params: 0
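The parameter counts can be checked by hand: each convolution filter has kernel height × kernel width × input channels weights plus one bias, each `Dense` unit has one weight per input plus one bias, and pooling and flatten layers have no parameters. A short sketch (helper names are hypothetical) that reproduces the totals above:

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # each filter: kernel_h * kernel_w * in_channels weights plus one bias
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(in_features, units):
    # weight matrix plus one bias per unit
    return (in_features + 1) * units

layers = {
    "conv2d_2": conv2d_params(3, 3, 3, 32),    # RGB input: 3 channels
    "conv2d_3": conv2d_params(3, 3, 32, 64),
    "dense_3": dense_params(6 * 6 * 64, 10),   # flattened 6x6x64 = 2304 features
    "dense_4": dense_params(10, 10),
    "dense_5": dense_params(10, 10),
}
total = sum(layers.values())
print(layers, total)
```

The first layer of the MNIST model differs only in its single input channel: `conv2d_params(3, 3, 1, 32)` gives 320.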

▶ Image data augmentation

* Using ImageDataGenerator

(raw_train_x, raw_train_y), (raw_test_x, raw_test_y) = tf.keras.datasets.cifar10.load_data()

print(raw_train_x.shape)

print(raw_train_y.shape)

print(raw_test_x.shape)

print(raw_test_y.shape)

train_x = raw_train_x/255

test_x = raw_test_x/255

train_y = raw_train_y

test_y = raw_test_y

→ (50000, 32, 32, 3) (50000, 1) (10000, 32, 32, 3) (10000, 1)

train_x = train_x[:10000]

train_y = train_y[:10000]

# ADDED START

from tensorflow.keras.preprocessing.image import ImageDataGenerator

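datagen = ImageDataGenerator(
    rotation_range=10,        # degree range for random rotations (0 to 180)
    width_shift_range=0.1,    # random horizontal shift, as a fraction of the image width
    height_shift_range=0.1,   # random vertical shift, as a fraction of the image height
    fill_mode='nearest',      # fill pixels exposed by a shift/rotation with the nearest edge pixel
    horizontal_flip=True,     # randomly flip images left-right
    vertical_flip=False       # do not flip images upside down
)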
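Two of these transforms are easy to sketch by hand. The NumPy code below is a simplified illustration, not the Keras implementation: it flips an image left-right and shifts it by a whole number of pixels, filling the exposed columns with the nearest edge column as `fill_mode='nearest'` does. Keras samples a possibly fractional shift from the range and applies it as an affine transform with interpolation; rounding to whole pixels here is an assumption made to keep the sketch short. The function names are hypothetical:

```python
import numpy as np

def horizontal_flip(img):
    """Mirror an (H, W, C) image left-to-right."""
    return img[:, ::-1, :]

def shift_width_nearest(img, shift):
    """Shift an (H, W, C) image right by `shift` pixels (left if negative),
    filling exposed columns with the nearest edge column ('nearest' fill)."""
    w = img.shape[1]
    # for each output column, the source column it copies, clamped to the image border
    src = np.clip(np.arange(w) - shift, 0, w - 1)
    return img[:, src, :]

rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))            # toy image with values in [0, 1]

width_shift_range = 0.1                  # same value as in the datagen above
max_shift = int(round(width_shift_range * img.shape[1]))   # 0.1 * 32 rounds to 3 pixels
shift = int(rng.integers(-max_shift, max_shift + 1))        # simplified: whole-pixel shift
augmented = shift_width_nearest(horizontal_flip(img), shift)
print(max_shift, shift, augmented.shape)
```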

# ADDED END

model = keras.Sequential()

model.add(Input((32,32,3)))

model.add(Conv2D(32, (3, 3)))

model.add(MaxPooling2D((2, 2)))

model.add(Conv2D(64, (3, 3)))

model.add(MaxPooling2D((2, 2)))

model.add(Flatten())

model.add(Dense(10, activation='relu'))

model.add(Dense(10, activation='relu'))

model.add(Dense(10, activation='softmax'))

model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

model.summary()

# model.fit(train_x, train_y, epochs=5, verbose=1, batch_size=128)

# fit_generator is deprecated in TensorFlow 2.x; fit() accepts the augmented-batch iterator directly
model.fit(datagen.flow(train_x, train_y, batch_size=128), epochs=5)

loss, acc = model.evaluate(test_x, test_y)

print("loss=",loss)

print("acc=",acc)

y_ = model.predict(test_x)

predicted = np.argmax(y_, axis=1)

print(predicted)
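`datagen.flow(train_x, train_y, batch_size=128)` feeds training with shuffled batches, applying a fresh random transform to each image while leaving the labels unchanged. With 10,000 images and a batch size of 128, one pass over the data is ceil(10000 / 128) = 79 batches, the last one holding 16 images. The generator below is a hypothetical stand-in written to show that flow (it uses only a random horizontal flip and tiny placeholder images, since only the counts matter here); it is not the Keras iterator:

```python
import math
import numpy as np

def flow_batches(x, y, batch_size, augment, rng):
    """One epoch over shuffled data, yielding freshly augmented batches (hypothetical stand-in)."""
    order = rng.permutation(len(x))            # new sample order every epoch
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        # each image gets its own random transform; labels are unchanged
        batch_x = np.stack([augment(img, rng) for img in x[idx]])
        yield batch_x, y[idx]

def random_hflip(img, rng):
    # flip left-right with probability 0.5, similar in spirit to horizontal_flip=True
    return img[:, ::-1, :] if rng.random() < 0.5 else img

rng = np.random.default_rng(0)
x = np.zeros((10000, 4, 4, 3), dtype=np.float32)   # 10,000 tiny placeholder images
y = np.zeros((10000, 1), dtype=np.int64)

batch_sizes = [len(bx) for bx, _ in flow_batches(x, y, 128, random_hflip, rng)]
print(len(batch_sizes), math.ceil(len(x) / 128), batch_sizes[-1])
```

Because the transforms are computed for every batch of every epoch, augmentation adds per-epoch work, which is one plausible reason training becomes slower.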

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_4 (Conv2D) | (None, 30, 30, 32) | 896 |
| max_pooling2d_4 (MaxPooling2D) | (None, 15, 15, 32) | 0 |
| conv2d_5 (Conv2D) | (None, 13, 13, 64) | 18496 |
| max_pooling2d_5 (MaxPooling2D) | (None, 6, 6, 64) | 0 |
| flatten_2 (Flatten) | (None, 2304) | 0 |
| dense_6 (Dense) | (None, 10) | 23050 |
| dense_7 (Dense) | (None, 10) | 110 |
| dense_8 (Dense) | (None, 10) | 110 |

Total params: 42,662
Trainable params: 42,662
Non-trainable params: 0

Compared with the run without data augmentation, the test loss is lower and the accuracy higher, so performance improved.

- Before augmentation: loss: 1.7496 - acc: 0.3587

- After augmentation: loss: 1.5547 - acc: 0.4406

However, training time increased from 12 seconds to 15 seconds per epoch.
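Put in relative terms, the reported numbers work out as follows. This is plain arithmetic on the figures above; they come from single 5-epoch runs on the 10,000-image subset, so the differences are indicative rather than exact:

```python
before = {"loss": 1.7496, "acc": 0.3587, "sec_per_epoch": 12}
after = {"loss": 1.5547, "acc": 0.4406, "sec_per_epoch": 15}

loss_drop = (before["loss"] - after["loss"]) / before["loss"]    # relative loss reduction
acc_gain_pts = (after["acc"] - before["acc"]) * 100               # absolute gain in percentage points
time_increase = (after["sec_per_epoch"] - before["sec_per_epoch"]) / before["sec_per_epoch"]

print(f"loss -{loss_drop:.1%}, accuracy +{acc_gain_pts:.2f} pts, time per epoch +{time_increase:.0%}")
```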
